4.3 Parallel and Distributed Computing

7 min read•june 18, 2024

Minna Chow

AP Computer Science Principles ⌨️

80 resourcesSee Units

Traditionally, programs are made with sequential computing in mind. That's when program instructions are processed one at a time.

However, as the demand for computers to become faster increased, sequential processing wasn't able to keep up. This is in part because you can only make a single processor so fast before the amount of heat it's generating literally causes it to melt.

A very accurate representation of the melting process; Image source: cicoGIFs

This problem led to the creation of new models of computing known as parallel and distributed computing.

Parallel and Distributed Computing Definitions

Parallel computing is where a program is broken into smaller sequential computing operations. Some of these operations are done at the same time using multiple processors. Most modern computers use parallel computing systems, with anywhere from 4 to 24 cores (or processors) running at the same time.

There are several advantages to parallel computing.

- Performing tasks at the same time helps to save a lot of time, and money as well.

- Parallel computing solutions are also able to scale more effectively than sequential solutions. This means if there's a change in scale (ex: more instructions to process than before), these solutions will handle the change better than sequential computing models would.

Distributed computing, on the other hand, is where multiple devices are used to run a program. These devices can be in different locations around the world, communicating by sending messages to each other. 🌎

With a distributed computing model, two "heads" are better than one. You get the power of two (or more) computers working on the same problem. Using distributed computing allows people to solve problems that they wouldn't be able to otherwise, due to a lack of storage or needing too much processing time otherwise. Modern search engines, applications that store data somewhere separate from the user's computer like Gmail and Google Docs, and *sigh* cryptocurrency mining all use distributed computing.

Due to their increased capacities, a parallel or distributed computing model can process large data sets or solve complex problems faster than a sequential computing model can. They come with the added perk of not melting your computer while they're doing it.

Execution Time, Efficiency, and Speedup Calculations

The AP CSP test will have conceptual questions about parallel and distributed computing, but they'll also have some calculation questions, too. The test may ask you to calculate the execution time of a sequential or a parallel computing solution. They may also ask you to compare the efficiency of two solutions. Finally, the test may ask you to calculate the speedup of a certain parallel solution.

Calculating Execution Time

Sequential Solutions

A sequential solution takes as long as the sum of all steps in the program. For example, if your program has three steps that take 40, 50, and 80 seconds respectively, the sequential solution would take 170 seconds to complete.

Parallel Computing Solutions

The speed of a parallel computing solution, on the other hand, depends on the number of cores involved. The more cores, the faster (to an extent) the solution is.

One of the best ways to understand this calculation is with examples. Going back to our original example with those three steps of 40, 50, and 80 seconds, a parallel computing solution where two processors are running would take 90 seconds to complete at its fastest.

Why is that?

Well, it's important to first acknowledge that we're working under the assumption that all steps are independent. The 40-second step, for example, doesn't depend on the result of the 80-second step in order to start. Therefore, the processors are free to do the steps in any order or combination.

Generally, you'll be looking for the minimum possible time, so we're going to want to do the longer processes first and at the same time. Let's call the two Processors Processor 1 and Processor 2. To minimize the amount of time needed, Processor 2 should do the 80-second step, and Processor 1 should do the 40 and 50-second steps sequentially. Processor 1 would complete the 40-second and the 50-second step in 90 seconds (taking the sum of each sequential step) and Processor 2 would complete the 80-second step in... well, 80 seconds.

Even though Processor 2 only took 80 seconds, it still has to "wait" for Processor 1 before the solution is complete.

In the example above, it was Processor 1’s 40 and 50-second sequential tasks that determined the final speed of the solution. However, this isn't always the case. In some cases, the longest parallel task will determine the final speed. If the task Processor 2 needed to do had taken 100 seconds, then the computing solution would have taken 100 seconds in total because of it.

Still confused? Check out the practice problem below. Practice makes perfect with these questions!

Clearly enough, the parallel computing solution is faster. A way to quantify just how much faster is called the speedup.

Calculating the Speedup

The speedup of a parallel solution is calculated by dividing the time it took to complete the task sequentially by the time it took to complete the task in parallel.

In the example above, that would be 170 (the time it took sequentially) divided by 90, or 1.88.

Speed Limits

Note that a parallel computing model can only be as fast as the speed of its sequential portions. Some steps need to be done sequentially, such as steps that require data from earlier steps in order to begin, and this will affect the overall speed.

For a non-programming example of this, imagine that some students are making a slideshow. One student is in charge of turning in the slideshow at the end. That student has to wait until everyone else is done to turn in the slideshow—that step can't be done in parallel with the steps it takes to work on the slideshow. It is not an independent step.

Before you start a speed calculation problem, make sure you know whether or not all steps are independent. Don't just assume they all are. (The question should tell you if they are or not.)

Eventually, the speedup effect of adding more parallel processors will wane the more and more you add them. This is because, inevitably, you'll need to wait on something that parallel computing can't speed up: either for sequential steps to complete or for other overhead such as communication time between processors. ⌚

Example Problem

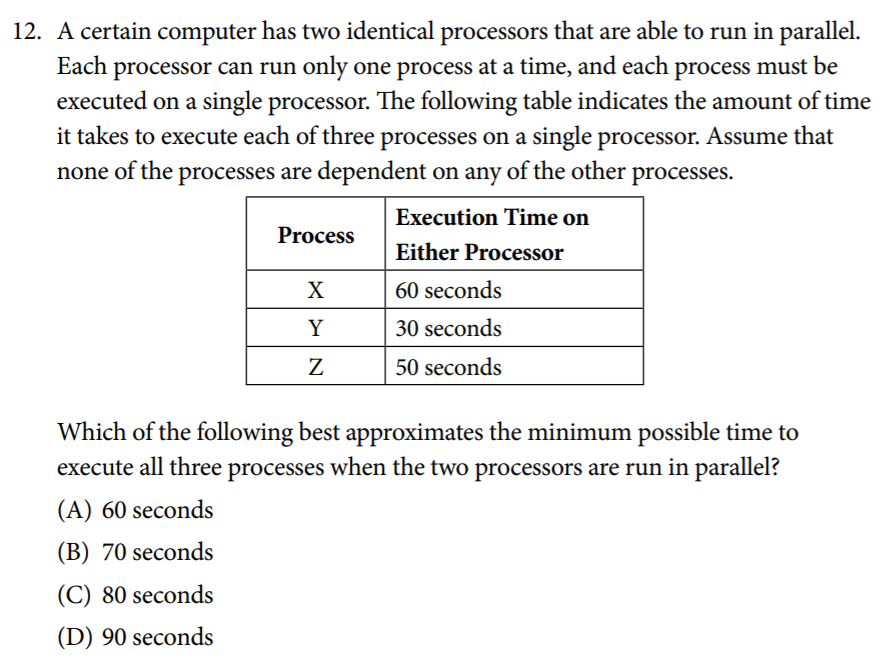

Now, let's try this example problem, straight from page 177 of the College Board's CED:

Answer

The answer is C: 80 seconds.

The easiest way to think of this is to walk through how the processors will operate.

We know that the computer has two processors, and that each processor can only run one process at a time. We have three processes to finish: a 60-second, 30-second and 50-second one.

None of the processes are dependent on each other, which means that they're free to run in any order and to run parallel to each other. In other words, you don't need to wait for any of the processes to finish before you start another.

Let's call these processors Processor A and Processor B.

So, what would the processors do?

- The question tells us we're looking for the minimum possible time, so we're going to want to do the longer processes first and at the same time. When computing begins, Processor A starts running the 60-second process and Processor B starts running the 50-second process.

- Processor B finishes the 50-second process and begins the 30-second process while Processor A is still running the 60-second process.

- Processor A finishes running the 60-second process and finds that there aren't any more processes to run. At this point, 60 seconds have passed overall, and Processor B is 10 seconds into running the 30-second process.

- Processor B finishes running 20 seconds later.

(It might help to draw a picture if you're having trouble keeping track of all the processes.)

Looking at this list, we can see that it takes 60 + 20 seconds to complete everything, which will add up to make 80 seconds in total.

Another way to think of this is to think about how long it will take the processor with the most work to do to finish its work. One of the processors has to complete both the 50-second and 30-second processes in series (while the other one only needs to do one, 60-second process), which adds to make 80 seconds. The 60-second step, done in parallel, is shorter than the time needed. That means it occurs while the sequential step is still running and doesn't affect the total time.

In Conclusion...

There we go! Another Big Idea squared away.

In Big Idea 5, we'll be talking about the impacts that computing devices and networks have had on our day-to-day lives.

Browse Study Guides By Unit

🕹Unit 1 – Creative Development

⚙️Unit 2 – Data

📱Unit 3 – Algorithms & Programming

🖥Unit 4 – Computer Systems & Networks

⌨️Unit 5 – Impact of Computing

🧐Exam Skills

Fiveable

Resources

© 2025 Fiveable Inc. All rights reserved.